Coronavirus: Social media 'spreading virus conspiracy theories'

Unregulated social media platforms like Facebook and YouTube may present a health risk to the UK because they are spreading conspiracy theories about coronavirus.

That's the conclusion of a peer-reviewed study published in the journal Psychological Medicine, which finds people who get their news from social media sources are more likely to break lockdown rules.

The research team from King's College London suggests social media news sites may need to do more to regulate misleading content.

"One wonders how long this state of affairs can be allowed to persist while social media platforms continue to provide a worldwide distribution mechanism for medical misinformation," the report concludes.

The study analysed surveys conducted across Britain in April and May this year.

Facebook said it had removed "hundreds of thousands" of coronavirus posts that could have led to harm, while putting warning labels on "90 million pieces of misinformation" globally in March and April.

People were asked if they believed a number of conspiracy theories relating to Covid-19: that the virus was made in a laboratory, that death and infection figures were being manipulated by the authorities, that symptoms were linked to 5G radiation or that there was no hard evidence the virus even exists.

None of these theories has any basis in verifiable fact.

Those who believed such conspiracies were significantly more likely to get their news from unregulated social media. For example, 56% of people who believe that there's no hard evidence coronavirus exists get a lot of their information from Facebook, compared with 20% of those who reject the conspiracy theory.

Sixty percent of those who believe there is a link between 5G and Covid-19 get a fair amount or great deal of their information on the virus from YouTube. Only 14% of those who reject the theory are regular YouTube users.

And 45% of people who believe Covid-19 deaths are being exaggerated by the authorities get a lot of their news on the virus from Facebook, more than twice the 19% of non-believers who say the same.

"There was a strong positive relationship between use of social media platforms as sources of knowledge about Covid-19 and holding one or more conspiracy beliefs," the study finds. "YouTube had the strongest association with conspiracy beliefs, followed by Facebook."

The research also found that people who had left home with possible Covid-19 symptoms were two to three times as likely as those who had not to get information about the virus from Facebook or YouTube.

People who admitted having had family or friends visit them at home were also much more likely to get their information about coronavirus from social media than those who had stuck to the rules.

The researchers conclude that there is a strong link between belief in conspiracy theories about the virus and risky behaviour during restrictions imposed to prevent its spread.

"Conspiracy beliefs act to inhibit health-protective behaviours," the study concludes, and "social media act as a vector for such beliefs."

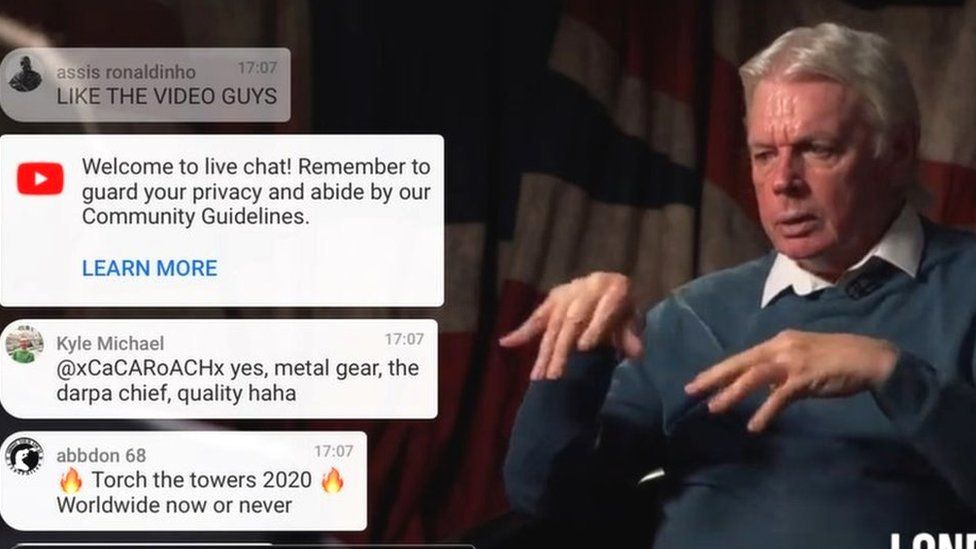

The report notes that when misinformation about Covid-19 was propagated by conspiracy theorist David Icke on the local London Live TV station, the UK broadcasting regulator Ofcom intervened.

London Live was sanctioned for content which "had the potential to cause significant harm to viewers".

YouTube and Facebook also deleted Mr Icke's official channels from their platforms, and social networks insist they have made efforts to bring fake news about the coronavirus under control.

The study will be seized upon by those who believe social media companies like Facebook and YouTube owner Google should do more to control the publication of false information.

A Facebook spokesman said: "We have removed hundreds of thousands of [pieces of] Covid-19-related misinformation that could lead to imminent harm, including posts about false cures, claims that social distancing measures do not work, and that 5G causes coronavirus.

"During March and April, we put warning labels on about 90 million pieces of Covid-19-related misinformation globally, which prevented people viewing the original content 95% of the time.

"We have also directed more than 3.5 million visits to official Covid-19 information and lockdown measures from the NHS and the government's website, directly from Facebook and Instagram."
