Coronavirus: The inside story of how UK's 'chaotic' testing regime 'broke all the rules'

Insiders reveal that data collection was haphazard, as officials went against accepted practice and "buffed the system".

Friday 10 July 2020 10:50, UK

As Britain sought to assemble its coronavirus testing programme, all the usual rules were broken.

In their effort to release rapid data showing the increase in testing capacity, officials from Public Health England (PHE) and the Department of Health and Social Care (DHSC) "hand-cranked" the numbers to ensure a constant stream of rising test figures was available for each day's press conference, Sky News has been told.

An internal audit later confirmed that some of those figures simply didn't add up.

According to multiple sources, the data collection was carried out in such a chaotic manner that we may never know for sure how many people have been tested for coronavirus.

"We completely buffed the system," says a senior Whitehall figure.

"We said: forget the conventions, we're putting [this data] out."

Sky News has learned that in the early days of mass COVID-19 testing, the statistical problems were so deep that one minister sat at their desk with Excel spreadsheets in front of them, calling round to try to collect data to use in each daily press conference.

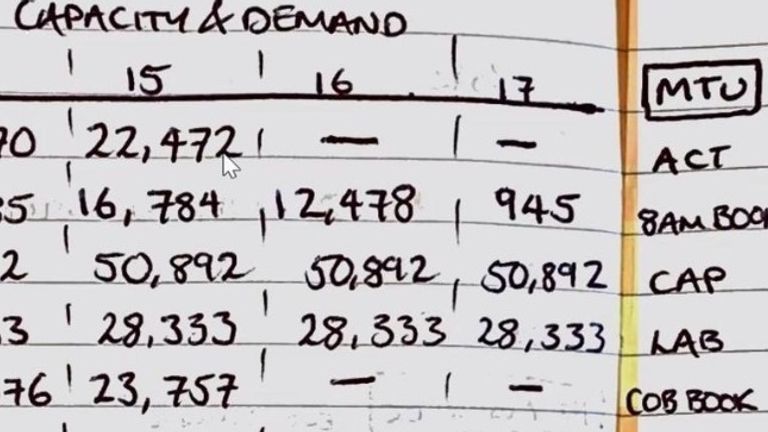

Even as Health Secretary Matt Hancock struggled to get the number of tests carried out up to 100,000 a day by the end of April, the collection of those testing statistics was still so primitive that they were being compiled with pen and paper.

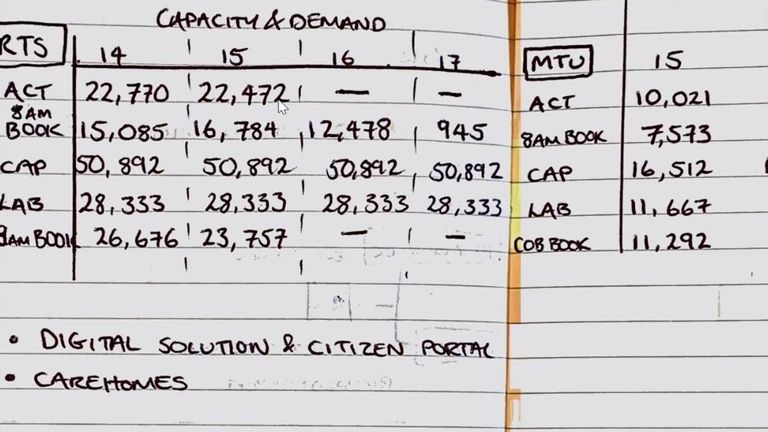

Sky News has uncovered hand-written tables of testing data, allegedly from mid-May, which show national testing figures for different parts of the operation.

The government is still struggling to get a handle on the testing data.

Late last week, it admitted that there was significant double-counting in those early testing statistics, saying the total number of cases in the UK was 30,000 fewer than its original data claimed.

Over the weekend, it said that it would no longer publish a daily estimate of how many people had been tested for the disease.

And while it has insisted that many of these problems are historic and have now been addressed, question marks over the data on testing continue to bedevil the government.

This week it was forced to revise its estimate of the number of tests carried out yet again: on Tuesday and Wednesday it eliminated another 10,000 tests which had been double-counted in the original data, then yesterday it added back another 20,000 tests.

It is the latest chapter of a fraught saga which goes back to the early days of the pandemic.

It is a saga which can now be told in the fullest detail yet.

This account is a product of dozens of conversations with individuals involved in the testing programme, including practitioners, managers, technologists and government officials - most of whom spoke on condition of anonymity.

The picture that emerges is one of government departments, agencies and their consultants struggling to navigate a maze of different computer systems and a Byzantine pathology sector, and of flawed decision-making driven by targets rather than by the need for sustainable systems.

The upshot was that in its early months, both the testing system and the data produced by it were simply "not fit for purpose", according to one senior official.

That matters because testing, and the data produced by a testing programme, are a crucial part of the response to the pandemic. Without a quick, reliable testing programme Britain will struggle to combat any future upsurge in COVID-19 cases.

Without the data that comes from it, authorities will struggle to assemble their response, be that local lockdowns or the administration of forensic quarantine programmes.

If that data is not trusted, there is no guarantee that those lockdowns will be obeyed. In short, the programme and its data are the most potent defence against a second wave of coronavirus cases.

For an illustration, one need only look at Leicester, where the lockdown has been extended locally because of an upsurge in cases in the city.

Such local lockdowns are likely to become more commonplace in the coming months as public health officials battle flare-ups. However, the Leicester episode also underlined another ongoing problem: a lack of clear, quick data.

Local officials said they had only been provided with postcode-level data on cases on 25 June, just days before the lockdown was announced.

And while DHSC insists that some postcode-level data on cases was provided to local authorities on an ad hoc basis from mid-June, and stresses that it has worked closely with Leicester City Council, the episode underlines the continuing struggles over data as the government works to unpick the errors baked into the system at the start.

Government sources insist the system is improving, pointing to small but significant changes in the way information is recorded and reported.

Late last week, NHS Test and Trace was able to announce some statistics on turnaround times for tests. For the first time since May it is now publishing the number of people being tested, albeit on a weekly rather than a daily basis, and without providing a cumulative number since the outbreak of the disease.

Some of the early double counting is being gradually removed, but DHSC has acknowledged that there is more to come. In its update to the statistics yesterday it said: "Due to data not being made available, it's likely that pillar 2 numbers for the 7 July are over-reported. The figures will be revised, once the necessary data has been made available this week."

It is the latest evidence of the difficulties officials continue to have in putting numbers on the test and trace system, which was assigned a further £10 billion of funding by the Chancellor this week.

There have been concerns with Britain's testing data for some time.

Last month, Sir David Norgrove, the chair of the UK Statistics Authority, wrote to Mr Hancock and warned that "the figures (on testing) are still far from complete and comprehensible".

He told him: "The way the data are analysed and presented currently gives them limited value... The aim seems to be to show the largest possible number of tests, even at the expense of understanding."

But it turns out the problems with the testing data went far deeper than is evident from the Statistics Authority letter.

They were a consequence of a system which, according to Sky News sources, was born out of chaos, where little thought was given to the kinds of data collection practices which are expected of most government enterprises.

Those problems began early on in the pandemic, with two fateful decisions about the way Britain's testing infrastructure would be arranged.

When news first broke of the virus in China at the turn of the year, the working plan at DHSC was to rely on PHE and its existing network of testing facilities.

Britain's pathology sector is not lavishly funded, but it has tried and tested practices that ensure results are delivered quickly and are usually fed back into medical records.

However, in the first three months of the year, PHE struggled to increase testing capacity at its labs - to the enormous frustration of central government.

"It was a risk," says one senior source.

"We bet on red. And it came up black. The Germans were lucky. They had lots of machines lying around and a system in place. We had next to nothing.

"This country's pharmaceutical industry has a real advantage when it comes to 'high science' - blockbuster drug development, biotech and so on - the sexy stuff. But we're far less focused on the unsexy end: diagnostics, where we tend to buy the kit in from abroad.

"That was a massive problem. Many of the NHS pathology labs are effectively cottage industries, cooking up tests by the day. They weren't equipped for what was coming their way."

Many working in NHS pathology labs reject that characterisation.

They argue that if the government had turned to them, then testing would be in a far better position - and point to their successes as proof.

Others working in academic labs say they offered their services to the government and PHE to push up testing capacity.

But in March, the government decided to reject those advances and set up an entirely separate network of testing sites and facilities, run in large part by commercial partners.

It was a bold, disruptive move in the face of what was seen as foot-dragging by PHE and NHS labs, but multiple sources from inside government, from labs and from the technology sector told Sky News it was this move more than any other that set in motion the problems with testing results and data that have plagued the system ever since.

Leaving PHE in charge of the "core" tests for hospitals and key health workers, which it now labelled "Pillar 1", it conceived of a mammoth new testing programme for the general public, with a network of "Lighthouse Laboratories" around the country.

This centralised testing scheme was named "Pillar 2" and would encompass home-testing kits and drive-in and walk-in centres around the country.

Having made testing an internal priority, on 2 April the health secretary doubled down with a public pledge to raise the number of daily tests to 100,000.

At that stage there were barely more than 10,000 tests a day being carried out, but Mr Hancock gambled that with the new Pillar 2 system being hastily set up, it was just possible to meet the target by the end of the month.

That target was the second fateful decision: from that point most of the focus in Whitehall was on hitting 100,000 as quickly as possible.

In the face of the unprecedented outbreak, less thought and time than usual was given to putting in place the systems and safeguards that would ensure test results could be robustly reported and tabulated into the data that would help inform Britain's response to COVID-19.

Quantity trumped quality.

A host of departments and consortia were engaged.

Deloitte was contracted to set up the booking system - everything from the digital infrastructure (websites, portals and booking systems) to the coding that would associate an individual with their testing vial.

Amazon was brought in to manage logistics and the army was asked to help out in operating some of those test centres.

It was a gargantuan operation which did indeed push up testing numbers rapidly, but as it expanded, those involved found it increasingly difficult to keep track of the crucial statistics.

Even in the absence of human error, managing a testing programme is a demanding logistical challenge, involving a chain with many points of fragility.

Consider a home testing kit. Nearly three million of these tests have been mailed out to households around the UK.

Each contains a swab, a vial, some packaging, and a lengthy 16-page booklet of instructions about how to self-administer the test.

Having taken a sample from their throat and nose, the individual must then attach a barcode to the vial the correct way round, put it in the packaging and send it back to one of the Lighthouse Labs.

Since the samples are time-sensitive, if a kit doesn't arrive within 48 hours the result could be invalid.

When the vial reaches the lab, another crucial chain of events begins: it needs to be assigned to a specific place in the polymerase chain reaction (PCR) machine, where the test itself takes place.

Sometimes mistakes happen at this stage: the vials are dropped or contaminants are introduced.

Sometimes tests have to be reprocessed.

But even if the test is done perfectly and the result is delivered quickly, without the associated data it quickly loses meaning.

According to one senior source, "we had problems not just with capacity but creating an end-to-end process".

They said: "Deloitte were amazing and threw together something extraordinary in weeks.

"But in the early days there's no doubt it was chaotic. A bag of tests would arrive on a given night and we wouldn't know where it came from".

Deloitte, whose consulting arm has worked on similar schemes in other countries, created a database which would collect as many of these datapoints as possible - down to whether a vial was dropped or spoiled.

According to insiders, it now connects directly to labs - via a data transfer system called the National Pathology Exchange (NPEx) - so that data is in the hands of the NHS.

But that arrangement took time to get up and running, and even in recent weeks doctors say they have struggled to get the data they need.

Tom Lewis, a doctor who oversees testing at North Devon Healthcare NHS Trust, says that when tests are carried out by his own labs the results come back within 12 hours.

Most importantly, those results are tied to crucial data points: they are filed alongside each person's unique NHS number, forming a permanent, traceable record of their coronavirus status.

By contrast, when tests are done by the national system, the results take far longer to arrive, in large part because the tests need to be transported to the Lighthouse Labs before processing.

Worryingly, those crucial data points, including links with an NHS number, are not routinely collected - making it extremely difficult to reconcile the test results with a given individual and their accompanying medical records.

This helps explain why DHSC was unable for many weeks to publish data for the number of people tested in England.

Dr Lewis said the data problems are particularly acute when he attempts to get confirmation of test results for his staff from Pillar 2 centres.

In order to verify that a staff member has been tested, he has been forced to ask for screenshots, which he then enters manually into an Excel spreadsheet.

Handling an outbreak at a care home, he was unable to find out who had been tested and what the results were.

"You know things have happened and you don't know when and to whom," he says. "It's a mess, a total mess."

The number of tests conducted by North Devon Hospital is tiny compared with the national scheme, but Dr Lewis says it could have been the basis for something much bigger, if only the increased capacity had been linked to existing infrastructure.

As evidence, he points to the fact that he sends some swabs to a larger teaching hospital in Exeter - but because each test is linked to an NHS number, it is recorded, traced, and made easily accessible as soon as it is done.

"The government system could have worked exactly the same way, if you had done it right," he says. "But instead they got management consultants and the military to sort it out."

Many of those Sky News has talked to referred back to that fateful decision to reject the offers of help from the existing lab community - both in hospitals and the academic sector - as one of the moments when things started to go wrong.

Those in government say there was simply no other way to raise testing capacity comfortably into six figures, though they acknowledge that Pillar 1 testing has increased its capacity well beyond what anyone expected in March.

Even there, problems arose as people scrambled to meet the health secretary's target.

"There were multiple test formats, multiple outputs, multiple different barcodes," said a senior manager in the Pillar 1 testing sector.

"We had one testing lab operating on one type of barcode system, hospitals on another, then another barcode system altogether in a different lab. These systems are extraordinarily complicated - and doing that at scale - well, it's no surprise there were so many problems.

"But the real problem was: there were too few people at the very top who understood the nature of the tests they were dealing with."

Still, while those working in the established testing sector had reliable ways of turning their results into overall data, Deloitte struggled to do so from the early days of the Lighthouse Labs of Pillar 2, according to one senior technologist who witnessed the testing scheme in operation.

"It was literally done on pen and paper; we were startled by how primitive the data collection process was," they said.

"It was barbaric. There were hundreds of people working on this new system, yet the national test results were being literally hand-written."

Sky News has seen one of those hand-written tables of test results, compiling results from regional testing sites and mobile testing units around the country.

Those involved concede that staff may have written out testing data with pen and paper, but say that such notes were likely to have been backups for electronic records, and that data was only collected manually before a digital platform was put in place in mid-April.

However, that hand-written table allegedly dates from mid-May, when the digital system was already up and running.

And in the rush to get figures out, there were few checks being made at the very top to ensure nothing was falling through the cracks.

"We were committed to publishing data on a daily basis," said a senior source at DHSC.

"None of it was properly audited. You can't throw up a new system in a few weeks and expect it to be perfect."

The Statistics Authority and Office for Statistics Regulation ask government statistics providers to try to follow the statistical code of conduct.

It stipulates: "Statistics must be the best available estimate of what they aim to measure, and should not mislead. To achieve this the data must be relevant, the methods must be sound and the assurance around the outputs must be clear."

Departmental insiders privately admit that the testing figures fell foul of this requirement.

"Normally when the government publishes data it takes three months to get the system approved," said the Whitehall figure.

"We didn't have that kind of time. So we completely buffed the system. We said: 'Forget the conventions, we're putting things out'. We didn't want to be accused of a lack of transparency.

"In doing so we probably broke some of the rules. But I don't think there was some wild cover-up."

Sky News has been told that while ministers were well aware of these problems with the data, they were nonetheless actively pushing to get that data released.

In the early days of the programme, one minister themselves phoned around to get numbers to add to Excel spreadsheets to produce each day's testing figure.

Come the end of April, Mr Hancock was able to claim that his target of 100,000 tests per day had been met - but only because the DHSC included thousands of tests that had been mailed out to households around the country.

The target was met but at some cost, with the majority of the test kits sent out to households not returned for processing.

According to DHSC data, some 4 million kits have been sent out since late April, but 2.6 million of them have not yet been processed - around two in every three home kits.

Even after the target had been hit, officials pushed on, producing statistics each day on the number of tests and the number of people tested.

Then, on 23 May, DHSC abruptly stopped publishing any numbers on how many people had been tested.

It removed the data series from its website, saying: "Due to technical difficulties with data collection we cannot provide people tested figures today."

This week it confirmed that it would no longer produce those statistics each day, pointing people towards a weekly measure of people tested from the new Test and Trace programme instead.

However, it has yet to produce a cumulative number for people tested.

In May, DHSC recruited Baroness Dido Harding, former chief executive of telecom company TalkTalk, to manage the NHS Test and Trace programme.

Following the exchange of letters with the Statistics Authority, she undertook to improve the collection of statistics - and, in recent weeks, new information has started to trickle out.

Gaps remain. Test and Trace is not able to say how quickly many care home tests are turned around, for instance, as these are conducted by pharmaceutical company Randox, which uses a different system to manage its records.

Yet insiders report a slow but steady improvement in the flow of data through the system.

However, there are still serious gaps. Asked whether it knew how many people had been tested in total since the disease reached the UK, DHSC said more than 11 million tests had been delivered, with a capacity to carry out more than 300,000 a day.

Asked repeatedly to respond to the main claims in this piece, about problems with data, about poor standards of collection and double counting, it added: "Throughout the pandemic, we have been transparent about our response to coronavirus and are always looking to improve the data we publish, including the way we update testing statistics.

"We have engaged with the Office of National Statistics and the Office for Statistics Regulation on our new approach to these publications and will continue to work closely with them as we develop these figures."

Deloitte, meanwhile, declined to comment.

But inside the department, there is an acknowledgement that for all the recent improvements in data gathering, the early mistakes may never be erased.

As one senior insider put it: "There's a growing recognition that we may never know for sure what happened with many of those early tests.

"We will probably never know how many people have been tested for the virus."

In the wake of this article's publication, DHSC published a new data series of the number of people tested for coronavirus going back to January.

These new data show that the cumulative number of people tested between the end of January and late May was just under 2 million, split evenly between Pillars 1 and 2.